Most AI delivery failures today aren’t model failures; they’re architecture and process failures upstream of the model.

Executive summary

- As LLMs improve, prompting becomes less differentiated – while data control, evaluation, and system design become the real sources of risk (and advantage).

- Teams that treat context as a first-class product ship more reliably and adapt faster as models change.

- The practical takeaway: own the pipeline, not just the model, or expect surprises in production.

Context is the product – not the prompt

Over the last year, model capability has advanced faster than most organizations can operationalize. Larger context windows, better reasoning, and falling inference costs have made it easier than ever to ship an AI demo.

Yet many production systems still fail in predictable ways:

- Incorrect or stale answers that are hard to trace

- Latency spikes caused by tool-heavy agent workflows

- Inconsistent outputs across similar queries

- Limited ability to explain why the system responded the way it did

These are rarely caused by the model itself.

They are usually caused by weak control over context.

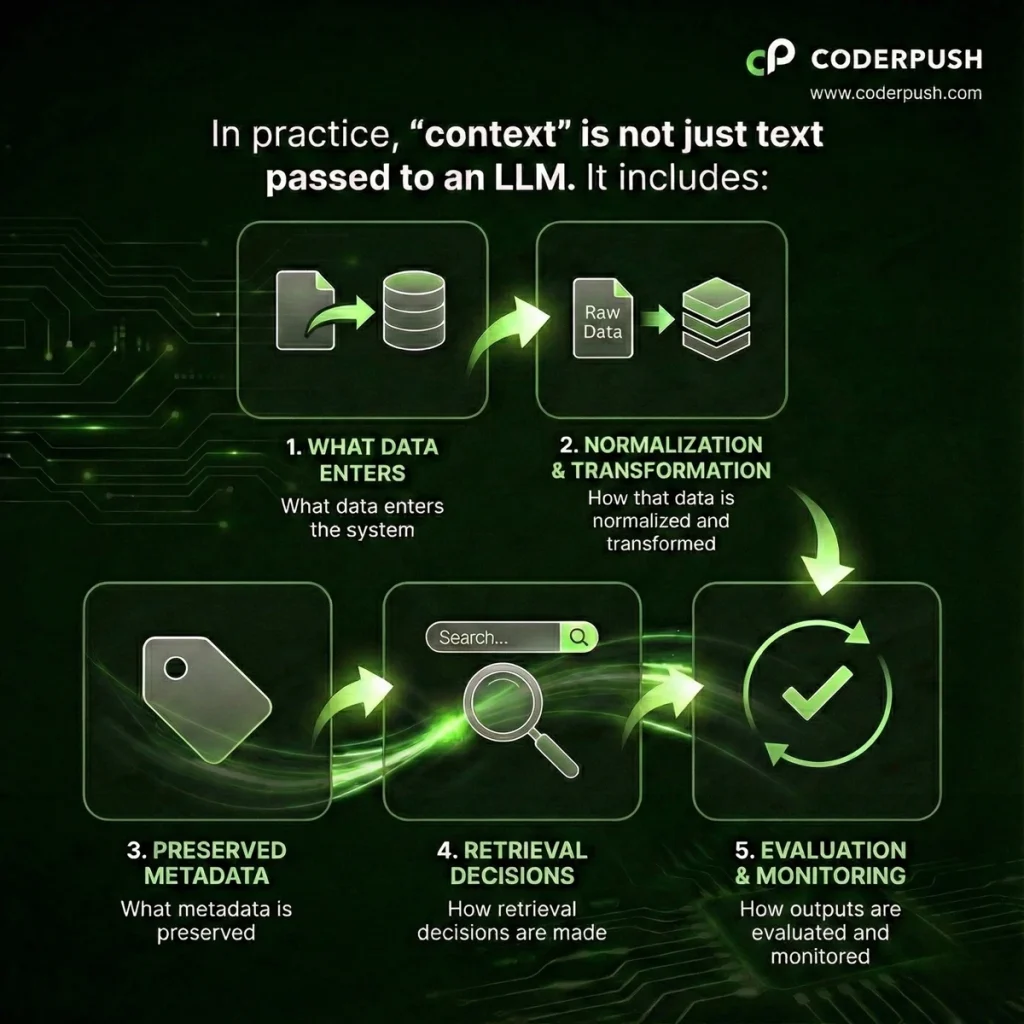

In practice, “context” is not just text passed to an LLM. It includes:

A practitioner’s view from the field

From hands-on delivery work, a few patterns show up consistently.

1. Normalization beats improvisation

Systems that introduce a clear normalization layer upstream of the model behave more predictably. Structured APIs reduce parsing ambiguity and make downstream evaluation possible. When every request is “free-form,” errors multiply quietly.

2. Metadata-aware retrieval matters

Letting agents access both raw inputs and derived indicators – with metadata intact – improves accuracy and debuggability. When something goes wrong, teams can trace which data influenced the answer, not just what the answer was.

3. Skills and tools are a trade-off, not a free win

Tool-based “skills” can be powerful for deep analysis and research workflows. They are often the wrong fit for fast, user-facing Q&A. Each tool call adds orchestration overhead and latency. Not every workflow benefits from maximal agency.

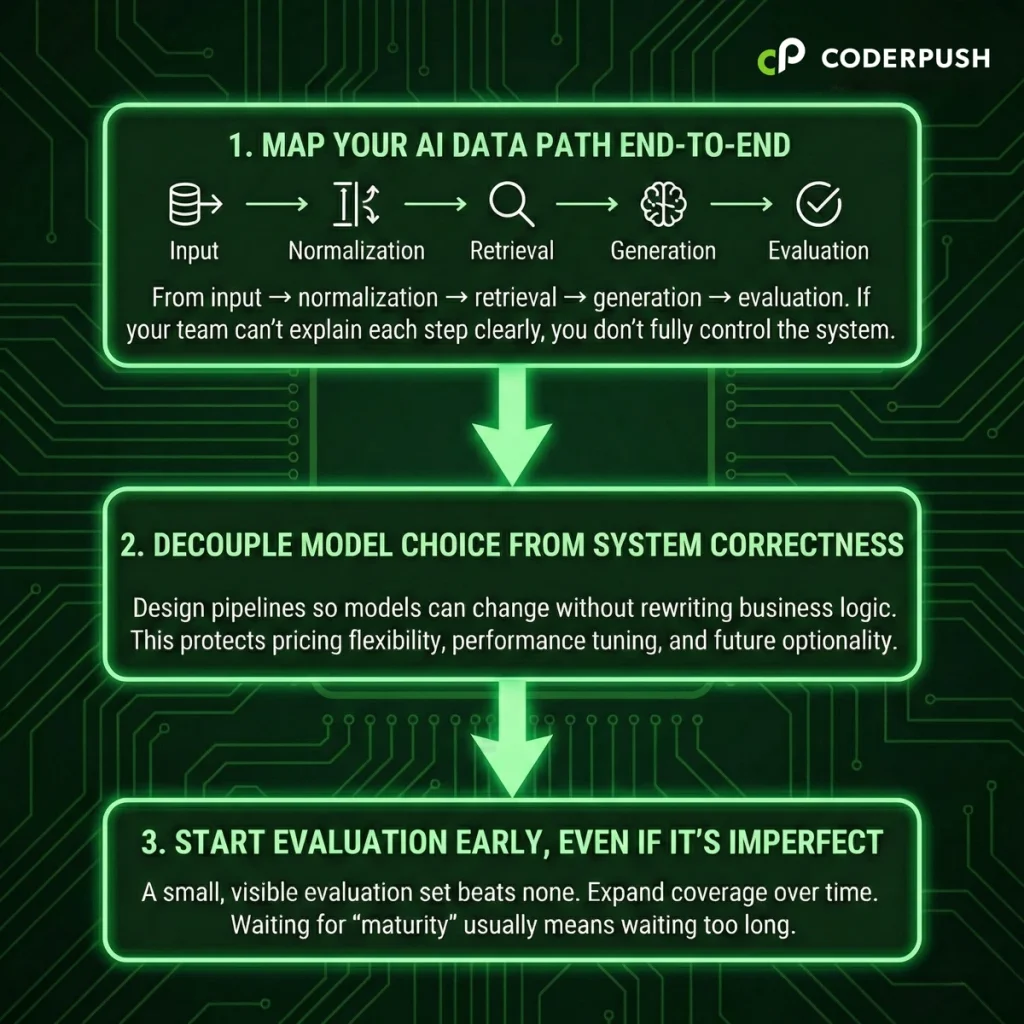

4. Scaffolding doesn’t disappear — it evolves

Better models reduce the need for overly complex prompts, but they don’t eliminate the need for process control. Treating the LLM as a replaceable component — or even as a judge within a broader workflow — preserves flexibility as models change.

5. Evaluation is still under-invested

Even lightweight evaluation (small test sets, basic CI checks, non-blocking initially) surfaces issues teams would otherwise miss. Perfect evaluation isn’t required to start. Visibility is.

None of this is about being “cutting-edge.”

It’s about not being surprised once real users arrive.

What leaders should do next?

If you’re responsible for AI delivery, not just experimentation, three actions matter more than most framework choices:

A final thought

Models will continue to improve.

What differentiates teams isn’t access to better models: it’s how well they prepare, constrain, and observe them.

If you’re moving beyond demos and into real usage, especially where accuracy, latency, or governance matter, it’s worth pressure-testing the architecture before scaling.

We’re always open to sharing what we’ve learned building AI systems under real-world constraints.